In the realm of Machine Learning, the quest for systems capable of learning from data to tackle real-world problems is incessant. Picture a machine discerning poisonous flowers from harmless ones or engaging in coherent conversations – these scenarios exemplify the potential of Machine Learning. Yet, behind these feats lies a fundamental pillar: mathematics.

From transforming data into comprehensible numerical formats to sculpting the intricate layers of Artificial Neural Networks (ANNs), mathematics orchestrates the symphony of Machine Learning. As we embark on unraveling the essence of mathematics in this domain, we’ll navigate through the foundational principles guiding the development of these intelligent systems.

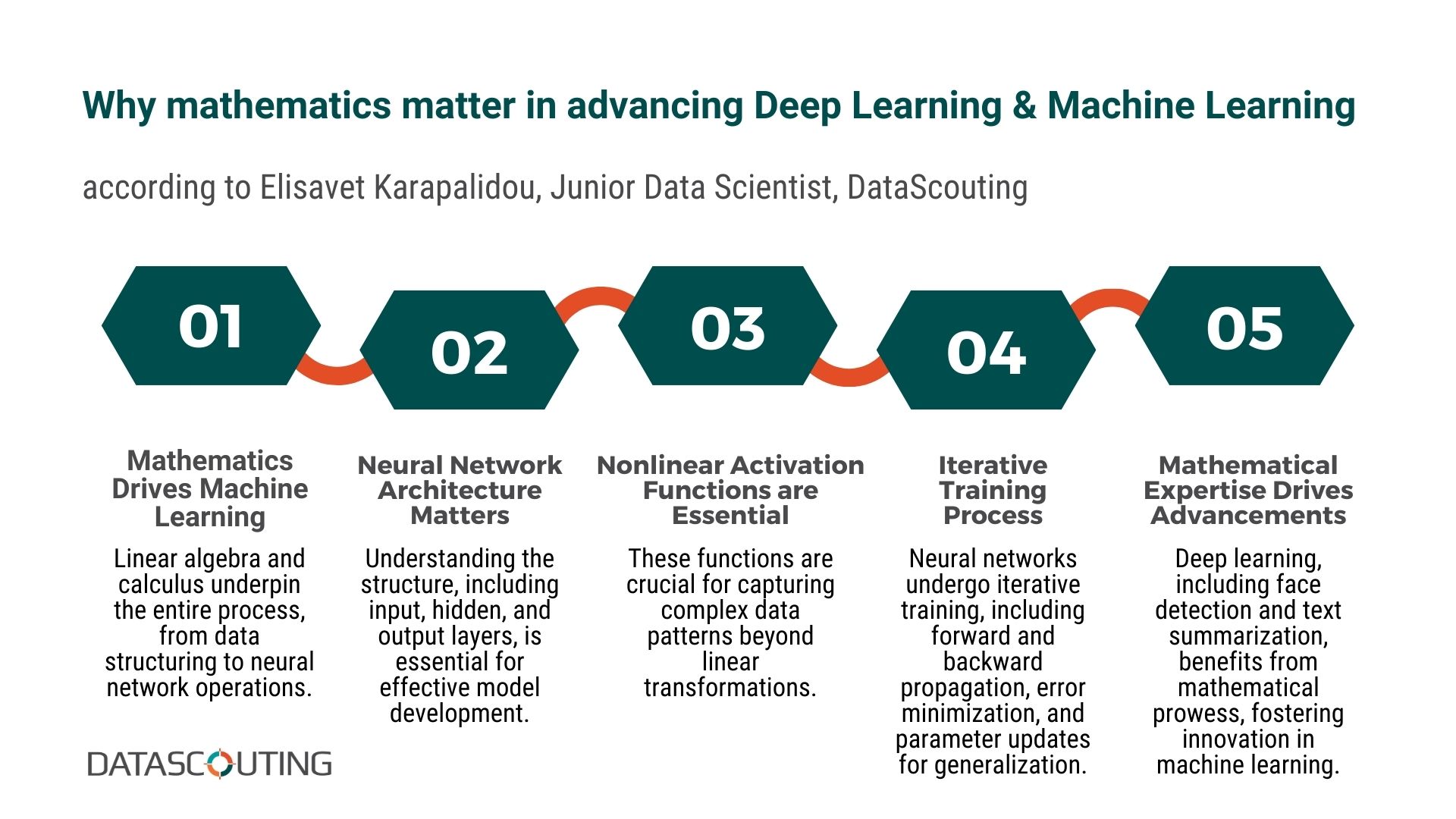

Join us as we delve into the mathematical bedrock of Machine Learning with Elisavet Karapalidou, our Junior Data Scientist, illuminating the pathways that lead to innovation and advancement in this dynamic field.

Q: How important is the knowledge of mathematics for the development of Deep Learning models and Machine Learning in general?

Elisavet: The field of Machine Learning focuses on developing systems that learn from data in order to apply that knowledge to problems related to those data. For example, a system exposed to images of poisonous flowers would probably be able to recognize them and warn us accordingly, while a system trained on vast amounts of text could become capable enough to hold a conversation or even write poetry.

Even in those two separate cases we encounter the first need for mathematics, namely restructuring and organizing the data in a way that machines can understand. Translating everything to ones and zeros sounds like a pretty extreme undertaking, so we can settle for the next best thing, which is numbers. Transforming categorical data, videos, images, audio, text and so on into numerical instances is by now a pretty straightforward process that leans more on symbolic numerical representation than on the theoretical side of mathematics. So let’s assume for now that all of our data records and variables are numerical; we will return later to another important part of data preprocessing from a more solid mathematical perspective.
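As a small illustration of turning categorical values into numbers, the sketch below uses one-hot encoding. This is only one of several possible encodings and is not named in the interview; the `one_hot_encode` helper and the flower labels are hypothetical.

```python
def one_hot_encode(labels):
    """Map each distinct label to a vector containing a single 1 (hypothetical helper)."""
    categories = sorted(set(labels))  # fixed, reproducible category order
    position = {category: i for i, category in enumerate(categories)}
    encoded = []
    for label in labels:
        vector = [0] * len(categories)
        vector[position[label]] = 1
        encoded.append(vector)
    return categories, encoded


labels = ["poisonous", "harmless", "poisonous"]
categories, encoded = one_hot_encode(labels)
print(categories)  # ['harmless', 'poisonous']
print(encoded)     # [[0, 1], [1, 0], [0, 1]]
```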

A good way to start answering the initial question is to go back to the basics. The most prominent architecture when developing a Machine Learning model is the Artificial Neural Network, which in its simplest, most conventional form consists of an input layer, an output layer and a hidden layer in between. The data, which at this point are just numbers, flow through the network via each layer’s nodes, and the output layer produces one or more numerical values as the model’s result, depending on the problem the model is being developed to solve. But what do those values mean and how were they calculated?

Looking further into the design of the above neural network, the input layer introduces each data record to the successive layers, while the hidden layer is the first place where the numerical data are processed. Every node of the hidden layer accepts all of the data record’s values as input, multiplies each one by a randomly initialized number called a weight, and finally adds the products together along with an additional randomly initialized number called the bias. This process is repeated for every node in the layer, and the corresponding mathematical expression is x1 * w1 + x2 * w2 + … + xn * wn + b, where xi are the data record’s feature values, while wi and b are respectively the weights and the bias of the node. The values produced by the hidden nodes are then fed to the nodes of the output layer in the same fashion, following the same mathematical expression with another set of randomly initialized weights and biases, before the final result is calculated.
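The weighted sum described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the helper names `init_layer` and `dense_layer`, the layer sizes (3 inputs, 4 hidden nodes, 1 output) and the uniform initialization range are all assumptions chosen for the example.

```python
import random


def init_layer(n_inputs, n_nodes, rng):
    """Create random weights (one row per node) and one random bias per node."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)] for _ in range(n_nodes)]
    biases = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    return weights, biases


def dense_layer(x, weights, biases):
    """Compute x1*w1 + x2*w2 + ... + xn*wn + b for every node of the layer."""
    return [
        sum(xi * wi for xi, wi in zip(x, node_weights)) + bias
        for node_weights, bias in zip(weights, biases)
    ]


rng = random.Random(0)           # seeded so the sketch is reproducible
record = [0.5, -1.2, 3.0]        # one data record with three feature values

w_hidden, b_hidden = init_layer(n_inputs=3, n_nodes=4, rng=rng)
w_out, b_out = init_layer(n_inputs=4, n_nodes=1, rng=rng)

hidden = dense_layer(record, w_hidden, b_hidden)  # hidden layer output
output = dense_layer(hidden, w_out, b_out)        # output layer result
print(hidden)
print(output)
```

With untrained random parameters the printed result carries no meaning yet; it only shows how the numbers flow from one layer to the next.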

The main task of a neural network is to process the available data and recognize the statistical rules and hidden mathematical patterns that form their underlying structure, patterns that would be impossible to identify with simple techniques of statistical analysis. Therefore, by increasing the capacity of the network, meaning its number of intermediate hidden layers and nodes, we increase the probability of the network capturing important patterns and acquiring the knowledge needed for future inference on similar data instances. That is where the term “Deep” in Deep Learning originated, highlighting the use of more hidden layers and the development of deeper networks.

The problem with the above implementation is that all the nodes of the network apply a linear transformation to their input, and stacked layers of linear transformations produce a result equivalent to a single linear transformation. Even though this approach would be sufficient for linear data, or even overkill, it would be unable to identify more complex patterns in non-linear datasets. A way to mitigate this disadvantage is to apply nonlinear functions, also referred to as activation functions, to the node outputs of every network layer.
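The collapse of stacked linear layers can be checked numerically. In the sketch below, two linear layers with hand-picked (hypothetical) weights give exactly the same result as one layer with weights W2·W1 and bias W2·b1 + b2, while inserting an activation function between them breaks that equivalence. ReLU is used here only as one common example of an activation function; the interview does not name a specific one.

```python
def linear(x, W, b):
    """Apply one linear layer: each row of W holds the weights of one node."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + bi for row, bi in zip(W, b)]


def relu(values):
    """ReLU activation: negative values become zero."""
    return [max(0.0, v) for v in values]


# Hypothetical weights and biases for two stacked layers
W1, b1 = [[1.0, -2.0], [0.5, 1.0]], [0.0, -1.0]
W2, b2 = [[2.0, 1.0]], [0.5]

# A single equivalent layer: W = W2·W1 and b = W2·b1 + b2
W = [
    [sum(W2[i][k] * W1[k][j] for k in range(len(W1))) for j in range(len(W1[0]))]
    for i in range(len(W2))
]
b = [sum(W2[i][k] * b1[k] for k in range(len(b1))) + b2[i] for i in range(len(W2))]

x = [1.0, 2.0]
stacked = linear(linear(x, W1, b1), W2, b2)
collapsed = linear(x, W, b)
with_relu = linear(relu(linear(x, W1, b1)), W2, b2)

print(stacked)    # [-4.0]
print(collapsed)  # [-4.0]
print(with_relu)  # [2.0]
```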

Another type of function connected to the development of a neural network is the loss function, which is tied to the result of the output layer. The purpose of the loss function is to measure how far the result is from the ground truth, assuming that it is provided, and to inform the network of the calculated error.
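As one concrete example of a loss function, the sketch below computes the mean squared error between predictions and ground truth. The choice of mean squared error and the sample values are assumptions made for illustration; the appropriate loss depends on the problem.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and ground truth."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


predictions = [0.9, 0.2, 0.4]   # hypothetical model outputs
targets = [1.0, 0.0, 1.0]       # hypothetical ground truth
print(mean_squared_error(predictions, targets))

# A perfect prediction yields zero error
print(mean_squared_error(targets, targets))  # 0.0
```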

The next mathematical process consists of propagating the error backwards through the network layers and updating all of its weights and biases. Specifically, each parameter’s contribution to the error is calculated as the gradient of the error with respect to that parameter, by applying the chain rule of calculus. All the parameters are then updated based on their contribution in a final round of computations implemented by a predetermined algorithm, usually called an optimizer, whose sole purpose is to minimize the loss function and consequently the model’s error when making predictions.
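The chain rule can be traced by hand on a deliberately tiny network: one input, one hidden node with a tanh activation, and one output node, trained with a squared-error loss. Everything in this sketch is an assumption chosen for readability (the network shape, the tanh activation, the starting parameters, and plain gradient descent as the optimizer); real libraries compute these gradients automatically.

```python
import math


def forward(p, x):
    """Forward pass: h = tanh(w1*x + b1), y = w2*h + b2."""
    h = math.tanh(p["w1"] * x + p["b1"])
    y = p["w2"] * h + p["b2"]
    return h, y


def loss(p, x, target):
    """Squared error between the network output and the ground truth."""
    _, y = forward(p, x)
    return (y - target) ** 2


def gradients(p, x, target):
    """Propagate the error backwards with the chain rule."""
    h, y = forward(p, x)
    dL_dy = 2.0 * (y - target)        # derivative of the loss w.r.t. the output
    dL_dh = dL_dy * p["w2"]           # back through the output node
    dL_dz = dL_dh * (1.0 - h ** 2)    # back through tanh, whose derivative is 1 - tanh^2
    return {"w1": dL_dz * x, "b1": dL_dz, "w2": dL_dy * h, "b2": dL_dy}


def gradient_descent_step(p, grads, lr):
    """Move every parameter a small step against its gradient."""
    return {name: value - lr * grads[name] for name, value in p.items()}


params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}  # hypothetical starting values
x, target = 1.0, 1.0

loss_before = loss(params, x, target)
grads = gradients(params, x, target)
updated = gradient_descent_step(params, grads, lr=0.1)
loss_after = loss(updated, x, target)

print(loss_before, loss_after)  # the loss is lower after the update
```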

All the mathematical steps described above constitute the learning process of the neural network. A training step lasts from the moment the first data instance is introduced to the network until the parameters are updated, while a training epoch is complete once all the instances of the dataset have passed through the network and contributed to the minimization of the error. Thus, a successfully trained model has gone through enough iterations for its parameters to be configured in a way that lets it generalize well to data similar to the data it has been exposed to.
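The difference between a training step and a training epoch shows up as two nested loops. The sketch below fits a single linear node to a toy dataset generated from y = 2x + 1, updating the parameters after every instance. The dataset, learning rate, number of epochs and the `train` function are all hypothetical choices for this illustration.

```python
def train(dataset, epochs, learning_rate):
    """Fit y = w*x + b with one parameter update per data instance."""
    w, b = 0.0, 0.0
    steps = 0
    for _ in range(epochs):                 # one epoch = one full pass over the dataset
        for x, target in dataset:           # one step = forward pass, error, update
            prediction = w * x + b
            error = prediction - target
            # Gradients of the squared error (prediction - target)^2
            w -= learning_rate * 2.0 * error * x
            b -= learning_rate * 2.0 * error
            steps += 1
    return w, b, steps


# Toy dataset generated from y = 2x + 1
dataset = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b, steps = train(dataset, epochs=200, learning_rate=0.05)

print(steps)                      # 800
print(round(w, 3), round(b, 3))   # 2.0 1.0
```

Here every instance triggers its own update, so an epoch consists of four steps; in practice instances are often grouped into batches, and a step then covers one batch.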

It is clear that the output depends on the delicate synergy of all these computational steps, as well as on the form of the inputs that set them in motion. With an overall view of the necessary calculations, we can return to transforming the data into a form more compatible with neural networks. A common preprocessing practice is to convert the data through a normalization technique, mitigating the disparity between values that stems from the different nature of the quantities each variable represents. Different techniques yield different conversions, but the important point is that their shared purpose is to make the data more homogeneous.
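Two widely used normalization techniques are min-max scaling and standardization, sketched below. The interview does not say which technique is meant, so both are shown as assumed examples; the function names and the sample values are hypothetical, and both functions assume the variable is not constant (otherwise the division would fail).

```python
import statistics


def min_max_scale(values):
    """Rescale values linearly so the smallest becomes 0 and the largest 1."""
    lowest, highest = min(values), max(values)
    return [(v - lowest) / (highest - lowest) for v in values]


def standardize(values):
    """Shift and scale values to zero mean and unit standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


heights_cm = [150.0, 160.0, 170.0]   # hypothetical variable with large values
print(min_max_scale(heights_cm))     # [0.0, 0.5, 1.0]
print(standardize(heights_cm))
```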

Mathematics can provide a deeper understanding of data preprocessing, network architecture, random parameter initialization, the functions used and the overall learning process. Nowadays, Machine Learning libraries provide all the tools necessary for developing a Deep Learning model in just a few steps, offering a variety of layer types, model architectures, tried and true activation functions, loss functions and optimizers, each serving a distinct purpose depending on the problem the model is being developed for. However, although a few mathematical concepts from Linear Algebra and Calculus are enough to understand and develop conventional systems, broader knowledge is essential for those who want to make better use of existing implementations and their complexity, or who hope to discover the next best network architecture or custom function, advancing research by contributing techniques better suited to increasing model performance and robustness.

Many state-of-the-art implementations over the years came about through experimentation and by taking advantage of the intricacies of mathematical possibilities, so it’s safe to assume that new Machine Learning and Deep Learning techniques are not far from being discovered, as long as mathematical knowledge stays at the forefront of their development.

Datascouting is a software company that specializes in media intelligence solutions that support PR experts and communication professionals in their work. Its dedicated Research and Development team leverages technological advancement in developing Deep Learning modules and, with continuously growing expertise, applies its mathematical knowledge to training, delivering, maintaining and improving specialized and robust models for a variety of Deep Learning applications, such as face detection, entity recognition, text summarization and automatic speech recognition. The ability to integrate custom components into model development helps ensure their successful performance, especially when it is rooted in a solid mathematical foundation.

About Elisavet:

Elisavet holds a Mathematics degree and an MSc in Applied Informatics. She is the latest addition to Datascouting’s R&D team, has an affinity for problem solving and values cooperative efforts. In her 1.5 years of experience, she has already served as a university research assistant and collaborated on paper publication.